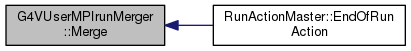

DMSG(0, "G4VUserMPIrunMerger::Merge called");
const unsigned int myrank = MPI::COMM_WORLD.Get_rank();
commSize = MPI::COMM_WORLD.Get_size();
if ( commSize == 1 ) {
  DMSG(1, "Comm world size is 1, nothing to do");

COMM_G4COMMAND_ = MPI::COMM_WORLD.Dup();

const G4double sttime = MPI::Wtime();

typedef std::function<void(unsigned int)> handler_t;
using std::placeholders::_1;

std::function<void(void)> barrier =
    std::bind(&MPI::Intracomm::Barrier,&COMM_G4COMMAND_);
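The listing wraps `Barrier` in a `std::function` through `std::bind`, and passes `sender` and `receiver` handlers whose definitions are not shown in this excerpt. The sketch below illustrates how member functions of the `Send(unsigned int)` and `Receive(unsigned int)` shape could be bound in the same way. `MockMerger`, `RunOnce`, and the recording vectors are hypothetical names introduced only for illustration; they are not part of Geant4, and no MPI calls are made.

```cpp
#include <functional>
#include <vector>

// Hypothetical stand-in for the merger class (not a Geant4 type).
class MockMerger {
public:
  // Same shapes as the Send/Receive signatures in the listing; here they only record the peer.
  void Send(const unsigned int destination) { sentTo.push_back(destination); }
  void Receive(const unsigned int source) { receivedFrom.push_back(source); }
  void Barrier() { ++barrierCalls; }

  // Bind the member functions to this object and invoke each handler once.
  void RunOnce(unsigned int peer) {
    typedef std::function<void(unsigned int)> handler_t;
    using std::placeholders::_1;
    // _1 forwards the rank argument supplied by the caller of the handler.
    handler_t sender = std::bind(&MockMerger::Send, this, _1);
    handler_t receiver = std::bind(&MockMerger::Receive, this, _1);
    // No placeholder is needed for a function that takes no arguments.
    std::function<void(void)> barrier = std::bind(&MockMerger::Barrier, this);
    sender(peer);
    receiver(peer);
    barrier();
  }

  std::vector<unsigned int> sentTo;
  std::vector<unsigned int> receivedFrom;
  int barrierCalls = 0;
};
```

Type-erasing the handlers this way lets a free function such as `G4mpi::Merge` drive the communication without knowing the concrete merger class.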

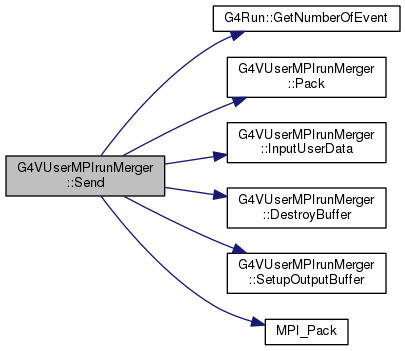

G4mpi::Merge( sender , receiver , barrier , commSize , myrank );

const G4double elapsed = MPI::Wtime() - sttime;

COMM_G4COMMAND_.Reduce(&bytesSent,&total,1,MPI::LONG,MPI::SUM,

if ( verbose > 0 && myrank == destinationRank ) {

  G4cout<<"G4VUserMPIrunMerger::Merge() - data transfer performances: "
        <<double(total)/1000./elapsed<<" kB/s"
        <<" (Total Data Transfer= "<<double(total)/1000<<" kB in "
        <<elapsed<<" s)."<<G4endl;

COMM_G4COMMAND_.Free();
DMSG(0, "G4VUserMPIrunMerger::Merge done");
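The performance message divides the reduced byte count by 1000 and by the elapsed wall-clock time to report kB/s. A minimal sketch of that arithmetic follows. `ThroughputKBps` is a hypothetical helper name, and the guard against a non-positive elapsed time is an added assumption that does not appear in the listing.

```cpp
// Hypothetical helper mirroring the expression double(total)/1000./elapsed.
double ThroughputKBps(long totalBytes, double elapsedSeconds) {
  // Assumption: report 0 instead of dividing by zero for a degenerate timing.
  if (elapsedSeconds <= 0.0) {
    return 0.0;
  }
  // 1 kB is taken as 1000 bytes, matching the listing.
  return static_cast<double>(totalBytes) / 1000. / elapsedSeconds;
}
```

For example, 2,000,000 bytes transferred in 2 s gives 1000 kB/s.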

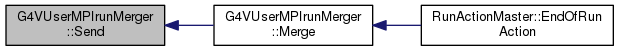

void Merge(std::function< void(unsigned int)> senderF, std::function< void(unsigned int)> receiverF, std::function< void(void)> barrierF, unsigned int commSize, unsigned int myrank)

G4GLOB_DLL std::ostream G4cout

void Send(const unsigned int destination)

G4double total(Particle const *const p1, Particle const *const p2)

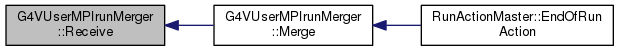

void Receive(const unsigned int source)
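The `Merge` signature above takes a sender, a receiver, and a barrier callback plus the communicator size and the caller's rank, but this excerpt does not show how those callbacks are scheduled. The sketch below is one possible schedule, a binomial-tree reduction toward rank 0, written against the same parameter list. It is an assumption chosen for illustration and is not taken from the Geant4 sources; `TreeMergeSketch` is a hypothetical name.

```cpp
#include <functional>

// Hypothetical schedule: in each round, ranks that are odd multiples of `step`
// send to the rank `step` below them, and even multiples receive.
void TreeMergeSketch(std::function<void(unsigned int)> senderF,
                     std::function<void(unsigned int)> receiverF,
                     std::function<void(void)> barrierF,
                     unsigned int commSize, unsigned int myrank) {
  bool done = false;  // set once this rank has sent its data
  for (unsigned int step = 1; step < commSize; step *= 2) {
    if (!done) {
      if (myrank % (2 * step) == 0) {
        // Receive only if the partner rank actually exists.
        if (myrank + step < commSize) {
          receiverF(myrank + step);
        }
      } else {
        senderF(myrank - step);
        done = true;
      }
    }
    // Every rank reaches the barrier in every round, so none is left waiting.
    barrierF();
  }
}
```

With five ranks, rank 0 receives from ranks 1, 2, and 4 over three rounds, while rank 3 sends to rank 2 in the first round and then only takes part in the barriers.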